Hi Pau,

I’ve already got pagination set up and working; that’s not the issue here.

There’s a limit somewhere on Prismic’s side that’s causing the query to return incomplete results. It isn’t mentioned in the documentation, and it breaks the pagination example you shared, so I can only assume it’s a bug.

Pagination in Prismic uses hasNextPage to decide whether there are still more documents to fetch. This code snippet is taken from AllPostsLoader.js in the react-graphql-pagination-example project.

But once the first 100 documents from my query have been retrieved, hasNextPage is false, so the script never triggers another query.

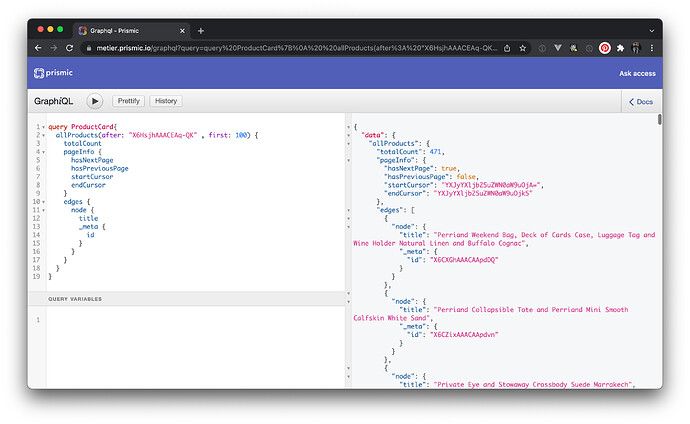
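For reference, the loop in that example comes down to roughly the following. This is a simplified TypeScript sketch, not the actual AllPostsLoader.js code. `fetchPage` stands in for whatever sends the GraphQL request. `fakeProductsApi` is a hypothetical in-memory stand-in, and its `cap` parameter models the limit I suspect.

```typescript
// Shape of one response, mirroring the fields requested in the ProductCard query.
interface PageInfo {
  hasNextPage: boolean;
  endCursor: string | null;
}

interface Page<T> {
  totalCount: number;
  pageInfo: PageInfo;
  edges: { node: T }[];
}

// Hypothetical page fetcher: `after` is the cursor to resume from (null = first page).
type FetchPage<T> = (after: string | null) => Promise<Page<T>>;

// Keep requesting pages until the API reports there is nothing left.
async function fetchAll<T>(fetchPage: FetchPage<T>): Promise<T[]> {
  const nodes: T[] = [];
  let after: string | null = null;
  for (;;) {
    const page: Page<T> = await fetchPage(after);
    for (const edge of page.edges) nodes.push(edge.node);
    // This is where the loop stops once hasNextPage comes back false.
    if (!page.pageInfo.hasNextPage || page.pageInfo.endCursor === null) break;
    after = page.pageInfo.endCursor;
  }
  return nodes;
}

// Hypothetical in-memory API for illustration only. Cursors are stringified
// indexes, and `cap` models a server-side limit on how many ids are considered.
function fakeProductsApi(ids: readonly string[], pageSize: number, cap = Infinity): FetchPage<string> {
  const visible = ids.slice(0, Math.min(ids.length, cap));
  return async (after) => {
    const start = after === null ? 0 : Number(after) + 1;
    const slice = visible.slice(start, start + pageSize);
    const last = start + slice.length - 1; // index of the last returned item
    return {
      totalCount: visible.length,
      pageInfo: {
        hasNextPage: last < visible.length - 1,
        endCursor: slice.length > 0 ? String(last) : null,
      },
      edges: slice.map((id) => ({ node: id })),
    };
  };
}
```

When given 157 ids with pages of 100, the uncapped fake returns all 157 over two pages. With `cap` set to 100, `fetchAll` stops after the first page because hasNextPage is false, which matches what I’m seeing.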

I’ve also tried querying the first 99 results, taking the endCursor from that response, and passing it in as the after variable for the next query. That query returns only 1 document. So totalCount is 100, and hasNextPage is false once 100 documents have been retrieved.
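To check that a hard cap accounts for those numbers, here’s a toy model of the request. It is a sketch only. `queryProducts` and its `cap` parameter are hypothetical. Cursors are plain indexes rather than Prismic’s opaque strings. It says nothing about how Prismic actually implements id_in.

```typescript
// Summary of one ProductCard request: how many edges came back, plus the
// totalCount and pageInfo fields. Cursors are plain indexes for simplicity.
interface PageResult {
  returned: number;
  totalCount: number;
  hasNextPage: boolean;
  endCursor: number | null;
}

// Hypothetical model of the suspected behaviour: only the first `cap` ids in
// id_in are matched, and only then are `first`/`after` applied.
function queryProducts(
  ids: readonly string[],
  first: number,
  after: number | null,
  cap = Infinity,
): PageResult {
  const matched = Math.min(ids.length, cap);
  const start = after === null ? 0 : after + 1;
  const end = Math.min(start + first, matched); // exclusive upper bound
  const returned = Math.max(end - start, 0);
  return {
    returned,
    totalCount: matched,
    hasNextPage: end < matched,
    endCursor: returned > 0 ? end - 1 : null,
  };
}
```

Take 157 ids with `cap = 100`. `queryProducts(ids, 99, null, 100)` returns 99 documents with endCursor 98. `queryProducts(ids, 100, 98, 100)` then returns 1 document with totalCount 100 and hasNextPage false, the same numbers as in the explorer. Without the cap, the second request returns the remaining 58 documents and totalCount is 157.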

It’s clear that only 100 documents are being returned, and I can’t retrieve any more using pagination.

Please could you test this using the details I’ve provided for our repo (metier.prismic.io)? It’s easy to reproduce in the GraphQL explorer with these steps:

- Run the query and you’ll see that totalCount is 100 and hasNextPage is false.
- Delete several ids from the variables array and rerun the query: totalCount is still 100 and hasNextPage is still false.

That means valid document ids beyond the first 100 are being ignored. The first query should have returned totalCount = 157 and hasNextPage = true, so that I could use pagination to retrieve the remaining documents.
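The expected response can be worked out from the variables alone. Here is a minimal sketch. It assumes every id in the array is a distinct, published product and that id_in matches each document once. `expectedResponse` is a hypothetical helper.

```typescript
// What the first response should contain, derived only from the ids sent.
interface ExpectedResponse {
  totalCount: number;
  firstPageEdges: number;
  hasNextPage: boolean;
  pages: number;
}

function expectedResponse(ids: readonly string[], first: number): ExpectedResponse {
  // Assumption: id_in acts like a set-membership filter, so duplicates count once
  // and every id refers to a published document.
  const totalCount = new Set(ids).size;
  return {
    totalCount,
    firstPageEdges: Math.min(totalCount, first),
    hasNextPage: totalCount > first,
    pages: Math.ceil(totalCount / first),
  };
}
```

For 157 distinct ids and `first: 100`, this gives totalCount 157, 100 edges on the first page, hasNextPage true and 2 pages. The observed totalCount is 100, which leaves 57 ids unaccounted for.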

Either there’s a limit on the size of the variables array that can be submitted, or there’s a limit on the number of documents that are returned.
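Either way, a possible stopgap is to split the ids into batches of at most 100 and run the query once per batch. This assumes the cap is 100 ids per request, a limit I haven’t found documented. In the sketch, `runBatch` is a hypothetical function that sends one ProductCard query and returns the titles.

```typescript
// Split a list into consecutive batches of at most `size` items.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) throw new RangeError("size must be a positive integer");
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Hypothetical runner: sends one ProductCard query for these ids, resolves to titles.
type RunBatch = (ids: string[]) => Promise<string[]>;

// Query each batch in turn and concatenate the results. Assumes a batch of
// `size` ids (default 100) stays under the suspected limit.
async function fetchInBatches(ids: readonly string[], runBatch: RunBatch, size = 100): Promise<string[]> {
  const titles: string[] = [];
  for (const batch of chunk(ids, size)) {
    titles.push(...(await runBatch(batch)));
  }
  return titles;
}
```

For 157 ids, `chunk` produces batches of 100 and 57. Each batch also fits in a single `first: 100` page, so no cursor handling would be needed per batch. This only works around the problem; it doesn’t explain why hasNextPage is false.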

```graphql
query ProductCard($ids: [String!]) {
  allProducts(id_in: $ids, first: 100) {
    totalCount
    pageInfo {
      hasNextPage
      hasPreviousPage
      startCursor
      endCursor
    }
    edges {
      node {
        title
      }
    }
  }
}
```

```json
{
  "ids": ["X6CGThAAACAApYU9","YT--lhEAACAAoT_h","X6CIdBAAACAApY7l","X6CI5hAAAB4ApZDw","X6CIBhAAACIApYzy","X6CGsxAAACAApYcA","X6CC7xAAACIApXYp","YT_A-BEAACAAoUqj","X6CENRAAACAApXvV","X6CD6xAAAB4ApXqN","YbsNYBIAACIAHhST","YZzFPBAAACEAkrSJ","X6BOoxAAACEApIlT","YFxrThIAACIAhXc4","YKpe3hEAACIAiwND","YZrUiBAAACEAigNB","YFxodxIAACIAhWpV","YZzGmBAAACEAkrrS","YFxjHBIAACIAhVJR","YFxnexIAACEAhWXU","X6CiLxAAACAApgNR","X6CiyhAAACAApgYb","X6Ci-RAAACIApgby","X6CiYRAAACEApgQz","X6Ch_hAAACIApgJ1","X6ChrBAAACIApgD_","X6ChMhAAACEApf7X","YZzEgxAAACQAkrEz","X6CMKxAAACIApZ-3","X6CMrxAAACIApaIX","YbsO1BIAACAAHhcW","X37wsRUAACgAkfc3","X6CNKRAAACAApaQ9","YT_CvREAAERtoVKG","X37wfxUAACkAkfZD","X6CQbhAAACAApbKD","X37tcxUAACkAkegn","X37vSxUAACkAkfCy","YGSHkhIAACIAp9q8","YZ52NhAAACQAqRwU","X6CRHRAAACAApbWo","X6CRXBAAACAApbbM","X6CSWxAAACIApbtM","X6CSrhAAACAApbzK","X6CS9hAAACEApb4R","YZ5-FhAAACEAqUA0","X6CV3RAAACAApcso","X6CWIxAAACEApcxn","X6CWyBAAACIApc9b","YAYfMxAAACUAQ0h2","X6CkpRAAACEApg6D","X6CjyRAAACEApgqa","X6Ck2hAAACAApg9x","YF3nLBIAACEAi_3w","X6ClPxAAAB4AphEB","YOTtwxIAAGkettny","YT_HgREAACAAoWe3","YOTouRIAACIAtsOV","X6FJDBAAAB4AqPsh","X0_MhRAAAGYIRNMN","X6Cc0hAAACAApese","X6CbahAAACAApeSm","X6CbJxAAACEApeNp","X6CfJxAAAB4ApfWN","X6CfdxAAACEApfb0","X19rixEAACMAd86Y","X6Ce3BAAACAApfRD","X9C-8xYAACoA2pzZ","X9DEmxYAACoA2raF","YZ2JXhAAACIAlgDI","YZ4fwxAAACIAmJ9F","YZ4gtRAAACIAmKOV","YZ4iZhAAACQAmKsm","YG9WJBMAACIAi2k5","YJ8TIBAAACEA_h9G","YG9VNRMAACAAi2Ts","YG9UWxMAACIAi2D7","YG9XKxMAACMAi24T","YG9WtBMAACAAi2vd","X6HsjhAAACEAq-QK","X6HrrxAAAB4Aq-Am","X6HsPRAAACAAq-Ki","X6HquRAAACAAq9vO","YG9q8BMAACEAi8ig","YG9psRMAACMAi8KK","YG9qPxMAACMAi8V6","YG9o6xMAACIAi75r","YG9nWBMAACMAi7dL","YG9oaxMAACIAi7wg","YG9J-hMAACAAizEi","X6KnJxAAACIAry0i","X6KmyxAAACEAryt4","YYB0GhEAAB8ARGjI","X6PnfRMAACIA4PY9","X6PnNRMAACMA4PT0","YZ4MPRAAACMAmElD","YZ4PTRAAACIAmFTR","X6PpcRMAACQA4P9l","YZ4IVxAAACEAmDc-","X6PqcBMAACQA4QQb","X6Pp8RMAACMA4QHD","X6PqNRMAACIA4QMF","YZ4y-xAAACIAmPdI","YZ4zaRAAACIAmPlL","X6PoNxMAACMA4Pmr","X6Pn_RMAACEA4PiX","YZvYkxAAACMAjpD2","YZvZOhAAACIAjpP6","X6HnkRAAAB4Aq811","X6HichAAACIAq7Y_","X6HjVhAAACEAq7pJ","X6HfmRAAACEAq6m-","X6Hf2xAAAB4Aq6ro","X6HjphAAACIAq7u2","X6HmYRAAACIAq8gk","X6HmKRAAACAAq8ce","YVZLVxEAACIAZ6BP","X6Hb_BAAACIAq5l9","YZrlNRAAACEAikzI","YZrglRAAACMAijgs","YZrd5BAAACEAiiw3","YZrfuRAAACMAijRe","YZrhJBAAACQAijqp","YN2lkhAAACIALJ4x","YN2m3RAAACEALKGt","YWA1rhAAACUAie8r","YWA2aRAAACMAifJw","YWA1CxAAACMAiexV","YWA0ZRAAACUAiel3","YWAzexAAACUAieVY","YWAyrRAAACQAieHj","X9Va7BYAACsA7sCN","X6KbQhAAACIArveG","YNx_MhIAACEAya7G","X6KezhAAACEArwgO","X6KfpxAAAB4Arwv4","X6KZ_BAAAB4ArvFl","YNuNXRIAAI8SxYW-","YN2bCBAAACIALHg7","YN2dQxAAACAALIIH","X6Kd0xAAAB4ArwOF","X6KemxAAAB4Arwce","X6KfWxAAACIArwqd","X6KNYxAAACEArrUk","X9VgFhYAACoA7tdn","X9VfUBYAACoA7tP3","X_GxnxAAAMJH6tWg","X_GwaxAAACUA6tA9","YP7_8xAAACMApIeC","YN21exAAACAALNPo","X_GtsBAAACUA6sQZ","YZ2BZBAAACQAldzy","X6KozhAAACAArzTG","X6KpmxAAACIArzh6","X6KpBRAAACIArzW_","X6KpOBAAACAArzan","X6KpbBAAAB4ArzeV"]
}
```
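To paginate across requests, the ProductCard query also needs a cursor argument so the next request can resume from the previous endCursor. Below is a sketch of the same query with an after variable added. The `$after` name and the `productCardVariables` helper are illustrative choices of mine. The field list is trimmed to what the pagination loop needs.

```typescript
// ProductCard query with an `after` cursor argument added (illustrative).
const PRODUCT_CARD_QUERY = `
  query ProductCard($ids: [String!], $after: String) {
    allProducts(id_in: $ids, first: 100, after: $after) {
      totalCount
      pageInfo {
        hasNextPage
        endCursor
      }
      edges {
        node {
          title
        }
      }
    }
  }
`;

interface ProductCardVariables {
  ids: string[];
  after?: string;
}

// Build variables for a request: omit `after` on the first page, otherwise
// pass the endCursor returned by the previous page.
function productCardVariables(ids: string[], endCursor: string | null): ProductCardVariables {
  return endCursor === null ? { ids } : { ids, after: endCursor };
}
```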